I’ve always found ROC curves a little confusing, particularly when the classes are imbalanced. This blog post explores receiver operating characteristic (ROC) curves and how they react to imbalanced classes.

I start by loading the necessary libraries.


Seed the random number generator so that everything here is reproducible.


I write a few functions that create fake data, plot fake data, and plot ROC curves.

I describe each function in turn below:

- grab_probability draws a sample of "probabilities" from a uniform distribution bounded between 0 and 1.

- create_fake_binary_data creates a vector of 0s and 1s. The mean of the vector is controlled by the positive input.

- probability_hist plots a normalized histogram (each bar depicts the proportion of data in it) bounded between 0 and 1.

- plot_roc_curve does not need an explanation.
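As a rough illustration, here is a minimal sketch of how the two data-generating helpers described above might look. The argument names (`sample_size`, `positive`), the seed, and the use of NumPy's global random state are assumptions of this sketch, not necessarily how the functions in this post are written. The two plotting helpers are left out.

```python
import numpy as np


def grab_probability(sample_size):
    """Draw `sample_size` fake "probabilities" uniformly from [0, 1)."""
    return np.random.uniform(low=0.0, high=1.0, size=sample_size)


def create_fake_binary_data(positive, sample_size):
    """Return a vector of 0s and 1s whose mean is roughly `positive`."""
    draws = np.random.uniform(low=0.0, high=1.0, size=sample_size)
    # Each entry is 1 with probability `positive`, otherwise 0.
    return (draws < positive).astype(int)


np.random.seed(0)  # arbitrary seed, chosen only for this sketch
probability = grab_probability(10000)
y = create_fake_binary_data(positive=0.5, sample_size=10000)
print(f"mean probability: {probability.mean():.2f}, mean y: {y.mean():.2f}")
```

Raising `positive` above 0.5 is one way to produce the imbalanced labels used later in the post.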


I have found one of the best ways to learn about an algorithm is to give it fake data. That way, I know the data, and can examine exactly what the algorithm does with the data. I then change the data and examine how the algorithm reacts to this change.

The first dataset I create is random data with balanced classes.

I create probability with the grab_probability function. This is a vector of numbers between 0 and 1. These data are meant to simulate the probabilities that would be produced by a model that is no better than chance.

I also create the vector y, which consists of random ones and zeroes. I will call the ones the positive class and the zeroes the negative class.

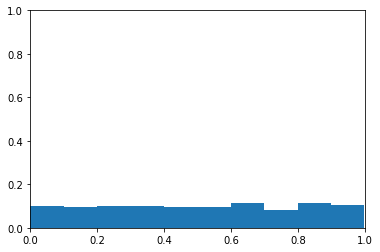

The plot below is a histogram of probability. The y-axis is the proportion of samples in each bin. The x-axis is probability levels. You can see the probabilities appear to be from a uniform distribution.


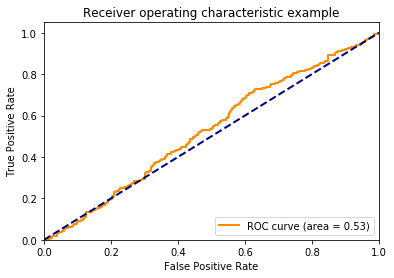

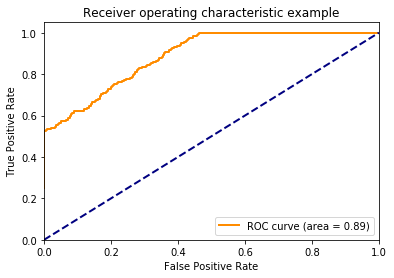

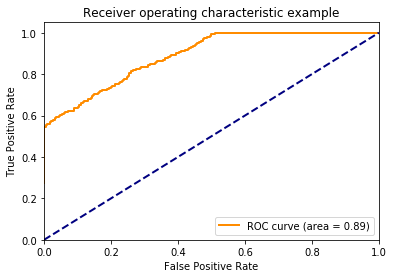

There’s no association between y and the probability, so I don’t expect the area under the curve to differ from chance (i.e., it should be about 0.5). I plot the ROC curve to confirm this below.
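The same expectation can be checked numerically. The sketch below assumes scikit-learn's `roc_auc_score` and regenerates its own random labels and scores (the seed and sample size are arbitrary choices for illustration), so the exact number will differ from the plot in this post.

```python
import numpy as np
from sklearn.metrics import roc_auc_score

np.random.seed(0)  # arbitrary seed for this sketch
y = np.random.randint(0, 2, size=10000)        # balanced labels
probability = np.random.uniform(size=10000)    # scores unrelated to y

# With no association between scores and labels, the AUC should sit near 0.5.
auc = roc_auc_score(y, probability)
print(f"AUC for random scores: {auc:.3f}")
```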


Let’s talk about the axes here. The y-axis is the true positive rate (TPR): the proportion of positive-class examples that the model correctly assigns to the positive class. The x-axis is the false positive rate (FPR): the proportion of negative-class examples that the model incorrectly assigns to the positive class.

One might wonder how the TPR and FPR can change. Doesn’t a model always produce the same guesses? The TPR and FPR can change because we can choose how liberal or conservative the model should be with assigning examples to the positive class. The lower left-hand corner of the plot above is when the model is maximally conservative (and assigns no examples to the positive class). The upper right-hand corner is when the model is maximally liberal and assigns every example to the positive class.
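To make the role of the threshold concrete, here is a small sketch that computes the TPR and FPR by hand at a few thresholds. The helper `rates_at_threshold` is a hypothetical name introduced only for this illustration, and the data are freshly generated random labels and scores.

```python
import numpy as np


def rates_at_threshold(y, probability, threshold):
    """Return (TPR, FPR) when scores >= threshold are called positive."""
    predicted_positive = probability >= threshold
    tpr = predicted_positive[y == 1].mean()  # share of positives caught
    fpr = predicted_positive[y == 0].mean()  # share of negatives mislabeled
    return tpr, fpr


np.random.seed(0)  # arbitrary seed for this sketch
y = np.random.randint(0, 2, size=10000)
probability = np.random.uniform(size=10000)

# From maximally conservative (nothing is positive) to maximally liberal.
for threshold in (1.01, 0.75, 0.5, 0.25, 0.0):
    tpr, fpr = rates_at_threshold(y, probability, threshold)
    print(f"threshold={threshold:.2f}  TPR={tpr:.2f}  FPR={fpr:.2f}")
```

A threshold above every score gives the lower left-hand corner of the ROC plot, and a threshold of 0 gives the upper right-hand corner.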

I used to assume that when a model is neutral in assigning examples to the positive class, that point would lie halfway between the end points, but this is not the case. The threshold creates points along the curve, but doesn’t dictate where these points lie. If this is confusing, continue to think about it as we march through the following plots.

The ROC curve is the balance between true and false positives as a threshold varies. To help visualize this balance, I create a function that plots the two classes as a stacked histogram, cumulative distribution functions, and the relative balance between the two classes.


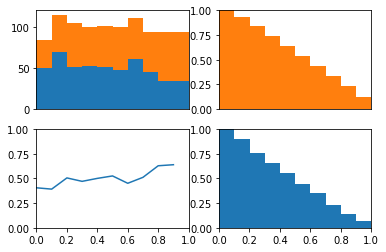

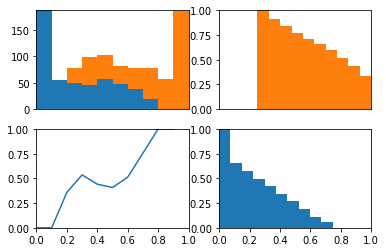

The idea behind this plot is we can visualize the model’s threshold moving from LEFT to RIGHT through the plots. As the threshold decreases, the model will guess the positive class more often. This means more and more of each class will be included when calculating the numerators of TPR and FPR.

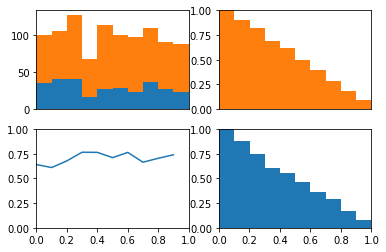

The top left plot is a stacked histogram. Orange depicts members of the positive class and blue depicts members of the negative class. On the x-axis (of all four plots) is probability.

If we continue thinking about the threshold as decreasing as the plots move from left to right, we can think of the top right plot (a reversed CDF of the positive class) as depicting the proportion of the positive class assigned to the positive class as the threshold varies (setting the TPR). We can think of the bottom right plot (a reversed CDF of the negative class) as depicting the proportion of the negative class assigned to the positive class as the threshold varies (setting the FPR).

In the bottom left plot, I plot the proportion of the positive class that falls in each bin of the histogram in the top left plot. Because the proportions of the positive and negative classes stay equal as the threshold varies, we assign positive and negative examples to the positive class at equal rates. The ROC curve therefore stays along the identity line and the area under the curve is 0.5.
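The per-bin balance can also be computed directly. This sketch assumes ten equal-width bins and freshly generated random data, so it only approximates what the plot shows.

```python
import numpy as np

np.random.seed(0)  # arbitrary seed for this sketch
y = np.random.randint(0, 2, size=10000)        # balanced labels
probability = np.random.uniform(size=10000)    # scores unrelated to y

bins = np.linspace(0.0, 1.0, 11)  # ten equal-width bins (an assumed bin count)
positive_counts, _ = np.histogram(probability[y == 1], bins=bins)
negative_counts, _ = np.histogram(probability[y == 0], bins=bins)

# Share of each bin that belongs to the positive class.
proportion_positive = positive_counts / (positive_counts + negative_counts)
print(np.round(proportion_positive, 2))
```

When the scores carry no information, every bin should hold roughly the same share of positives, which is what keeps the ROC curve on the identity line.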


Next, I do the same process as above but with fake probabilities that are predictive of the label. The function biased_probability produces probabilities that tend to be greater for the positive class and lesser for the negative class.
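One hypothetical way to write such a function is sketched below. The `shift` parameter, its value of 0.25, and the clipping step are all assumptions made for illustration. The sketch only guarantees the property described above: scores lean high for the positive class and low for the negative class.

```python
import numpy as np
from sklearn.metrics import roc_auc_score


def biased_probability(y, shift=0.25):
    """Return fake scores that lean high for y == 1 and low for y == 0.

    The `shift` parameter and the clipping step are assumptions of this sketch.
    """
    y = np.asarray(y)
    noise = np.random.uniform(size=len(y))
    # Push positives up and negatives down, then clip back into [0, 1].
    shifted = np.where(y == 1, noise + shift, noise - shift)
    return np.clip(shifted, 0.0, 1.0)


np.random.seed(0)  # arbitrary seed for this sketch
y = np.random.randint(0, 2, size=10000)
probability = biased_probability(y)
auc = roc_auc_score(y, probability)
print(f"mean score, positive class: {probability[y == 1].mean():.2f}")
print(f"mean score, negative class: {probability[y == 0].mean():.2f}")
print(f"AUC: {auc:.3f}")
```

Clipping piles some scores up at exactly 0 and 1, so a histogram of these scores has modes at both ends.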


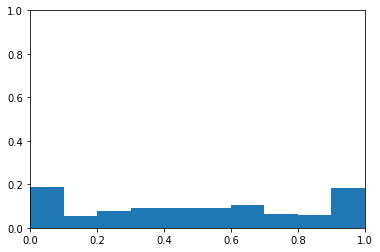

I create this data for a balanced class problem again. Using the same y vector, I adjust the probabilities so that they are predictive of the values in this y vector. Below, you can see the probability data as a histogram. The data no longer appear to be drawn from a uniform distribution. Instead, there are modes near 0 and 1.


Now, we get a nice ROC curve which leaves the identity line. This is not surprising since I designed the probabilities to be predictive. Notice how quickly the model achieves a TPR of 1. Remember this when looking at the plots below.


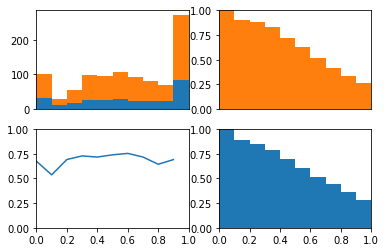

In the upper left plot below, we can clearly see that the positive class occurs more often than the negative class on the right side of the plot.

Now remember that the lower left-hand side of the ROC plot is when we are most conservative. This corresponds to the right-hand side of these plots where the model is confident that these examples are from the positive class.

If we look at the CDFs on the right side, we can see the positive class (in orange) has many examples on the right side of these plots while the negative class (in blue) has none. This is why the TPR immediately jumps to about 0.5 in the ROC curve above. We also see the positive class has no examples on the left side of these plots while the negative class has many. This is why the TPR saturates at 1 well before the FPR does.

In other words, because the model is quite certain that some examples are from the positive class, the ROC curve quickly jumps up on the y-axis. Because the model is quite certain as to which examples are from the negative class, the ROC curve saturates on the y-axis well before the end of the x-axis.
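These two features of the curve can be read off numerically with scikit-learn's `roc_curve`. The scores below come from an assumed stand-in for biased_probability (uniform noise shifted by 0.25 toward the correct class and clipped to [0, 1]), so the numbers illustrate the idea rather than reproduce the plots in this post.

```python
import numpy as np
from sklearn.metrics import roc_curve

np.random.seed(0)  # arbitrary seed for this sketch
y = np.random.randint(0, 2, size=10000)
noise = np.random.uniform(size=10000)
# Assumed stand-in for biased_probability: shift by +/- 0.25, clip to [0, 1].
probability = np.clip(np.where(y == 1, noise + 0.25, noise - 0.25), 0.0, 1.0)

fpr, tpr, thresholds = roc_curve(y, probability, drop_intermediate=False)

# How high the curve climbs before the first false positive appears.
tpr_before_any_false_positive = tpr[fpr == 0].max()
# How far along the x-axis the curve is when the TPR first reaches 1.
fpr_when_tpr_saturates = fpr[tpr == 1].min()

print(f"TPR while FPR is still 0: {tpr_before_any_false_positive:.2f}")
print(f"FPR when TPR first hits 1: {fpr_when_tpr_saturates:.2f}")
```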


After those two examples, I think we have a good handle on the ROC curve in the balanced class situation. Now let’s make some fake data when the classes are unbalanced. The probabilities will be completely random.


Average Test Value: 0.70

Average Probability: 0.49

Again, this is fake data, so the probabilities do not reflect the fact that the classes are imbalanced.

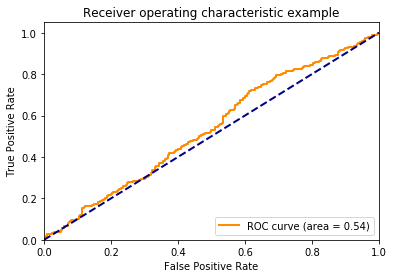

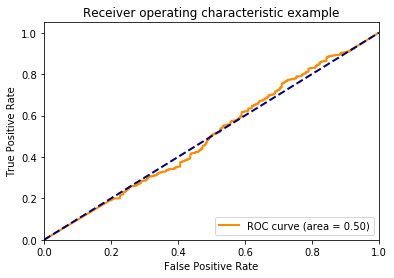

Below, we can see that the ROC curve agrees that the data are completely random.
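A numerical version of this check is sketched here, assuming roughly 70% positive labels (in line with the average test value reported above) and scikit-learn's `roc_auc_score`. The seed and sample size are arbitrary, so the printed values will not match the post's output exactly.

```python
import numpy as np
from sklearn.metrics import roc_auc_score

np.random.seed(0)  # arbitrary seed for this sketch
sample_size = 10000
# Imbalanced labels: about 70% of examples fall in the positive class.
y = (np.random.uniform(size=sample_size) < 0.7).astype(int)
# Scores are still pure noise, unrelated to the labels.
probability = np.random.uniform(size=sample_size)

auc = roc_auc_score(y, probability)
print(f"Average Test Value: {y.mean():.2f}")
print(f"Average Probability: {probability.mean():.2f}")
print(f"AUC: {auc:.3f}")
```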


Now, let’s create biased probabilities and see if the ROC curve differs from chance.


It does, as we expect.


Importantly, the probabilities now reflect the biased classes.


0.602536255717

Using these same probabilities, let’s remove the relationship between the probabilities and the output variable by shuffling the data.
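The shuffling step might look something like the sketch below. It uses `np.random.permutation` to reorder the scores and an assumed stand-in for biased_probability (noise shifted by 0.25 toward the correct class, clipped to [0, 1]). The labels, seed, and sample size are made up for illustration.

```python
import numpy as np
from sklearn.metrics import roc_auc_score

np.random.seed(0)  # arbitrary seed for this sketch
sample_size = 10000
y = (np.random.uniform(size=sample_size) < 0.7).astype(int)  # ~70% positive
noise = np.random.uniform(size=sample_size)
# Assumed stand-in for biased_probability: shift by +/- 0.25, clip to [0, 1].
probability = np.clip(np.where(y == 1, noise + 0.25, noise - 0.25), 0.0, 1.0)

# Shuffling keeps the same set of scores but breaks their link to the labels.
shuffled_probability = np.random.permutation(probability)

auc_before = roc_auc_score(y, probability)
auc_after = roc_auc_score(y, shuffled_probability)
print(f"AUC before shuffling: {auc_before:.3f}")
print(f"AUC after shuffling:  {auc_after:.3f}")
print(f"mean score (unchanged by shuffling): {shuffled_probability.mean():.2f}")
```

Because the shuffle only reorders the scores, their average still reflects the imbalanced classes even though the scores no longer predict the labels.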


Beautiful! The ROC curve stays on the identity line. We can see that this is because, while the positive class is predicted more often, the positive class is evenly distributed across the different thresholds.


Importantly, this demonstrates that even with imbalanced classes, if a model is at chance, then the ROC curve will reflect this chance performance. I do a similar demonstration with fake data here.


CPython 3.6.3

IPython 6.1.0

numpy 1.13.3

matplotlib 2.0.2

sklearn 0.19.1

compiler : GCC 7.2.0

system : Linux

release : 4.13.0-36-generic

machine : x86_64

processor : x86_64

CPU cores : 4

interpreter: 64bit