In this post I wanted to do a quick follow up to a previous post about predicting career nba performance from rookie year data.

After my previous post, I started to get a little worried about my career prediction model. Specifically, I started to wonder about whether my model was underfitting or overfitting the data. Underfitting occurs when the model has too much “bias” and cannot accomodate the data’s shape. Overfitting occurs when the model is too flexible and can account for all variance in a data set - even variance due to noise. In this post, I will quickly re-create my player prediction model, and investigate whether underfitting and overfitting are a problem.

Because this post largely repeats a previous one, I haven’t written quite as much about the code. If you would like to read more about the code, see my previous posts.

As usual, I will post all code as a jupyter notebook on my github.

1 2 3 4 5 6 7 8 9 | |

Load the data. Reminder - this data is still available on my github.

1 2 3 4 5 6 7 8 | |

Load more data, and normalize it data for the PCA transformation.

1 2 3 4 5 6 7 8 | |

Use k-means to group players according to their performance. See my post on grouping players for more info.

1 2 3 4 5 6 7 8 | |

Run a separate regression on each group of players. I calculate mean absolute error (a variant of mean squared error) for each model. I used mean absolute error because it’s on the same scale as the data, and easier to interpret. I will use this later to evaluate just how accurate these models are. Quick reminder - I am trying to predict career WS/48 with MANY predictor variables from rookie year performance such rebounding and scoring statistics.

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 33 34 35 36 37 38 39 40 41 | |

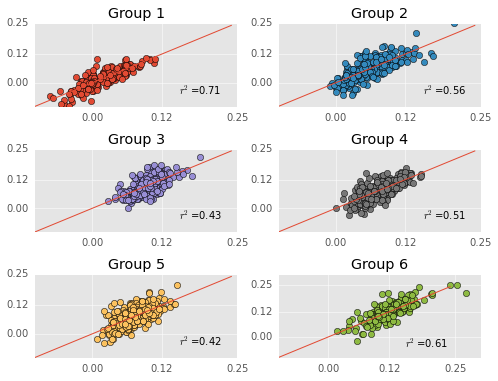

More quick reminders - predicted performances are on the Y-axis, actual performances are on the X-axis, and the red line is the identity line. Thus far, everything has been exactly the same as my previous post (although my group labels are different).

I want to investigate whether the model is overfitting the data. If the data is overfitting the data, then the error should go up when training and testing with different datasets (because the model was fitting itself to noise and noise changes when the datasets change). To investigate whether the model overfits the data, I will evaluate whether the model “generalizes” via cross-validation.

The reason I’m worried about overfitting is I used a LOT of predictors in these models and the number of predictors might have allowed the model the model to fit noise in the predictors.

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 | |

Group 0

Initial Mean Absolute Error: 0.0161

Cross Validation MAE: 0.0520

Group 1

Initial Mean Absolute Error: 0.0251

Cross Validation MAE: 0.0767

Group 2

Initial Mean Absolute Error: 0.0202

Cross Validation MAE: 0.0369

Group 3

Initial Mean Absolute Error: 0.0200

Cross Validation MAE: 0.0263

Group 4

Initial Mean Absolute Error: 0.0206

Cross Validation MAE: 0.0254

Group 5

Initial Mean Absolute Error: 0.0244

Cross Validation MAE: 0.0665

Above I print out the model’s initial mean absolute error and median absolute error when fitting cross-validated data.

The models definitely have more error when cross validated. The change in error is worse in some groups than others. For instance, error dramatically increases in Group 1. Keep in mind that the scoring measure here is mean absolute error, so error is in the same scale as WS/48. An average error of 0.04 in WS/48 is sizable, leaving me worried that the models overfit the data.

Unfortunately, Group 1 is the “scorers” group, so the group with most the interesting players is where the model fails most…

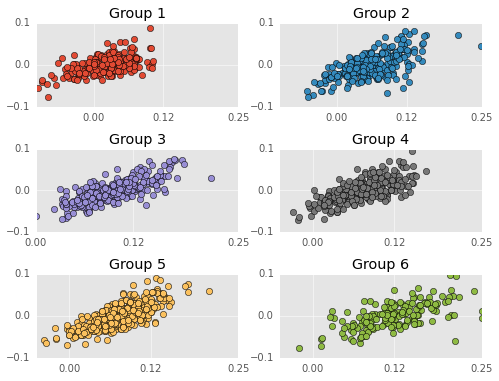

Next, I will look into whether my models underfit the data. I am worried that my models underfit the data because I used linear regression, which has very little flexibility. To investigate this, I will plot the residuals of each model. Residuals are the error between my model’s prediction and the actual performance.

Linear regression assumes that residuals are uncorrelated and evenly distributed around 0. If this is not the case, then the linear regression is underfitting the data.

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 | |

Residuals are on the Y-axis and career performances are on the X-axis. Negative residuals are over predictions (the player is worse than my model predicts) and postive residuals are under predictions (the player is better than my model predicts). I don’t test this, but the residuals appear VERY correlated. That is, the model tends to over estimate bad players (players with WS/48 less than 0.0) and under estimate good players. Just to clarify, non-correlated residuals would have no apparent slope.

This means the model is making systematic errors and not fitting the actual shape of the data. I’m not going to say the model is damned, but this is an obvious sign that the model needs more flexibility.

No model is perfect, but this model definitely needs more work. I’ve been playing with more flexible models and will post these models here if they do a better job predicting player performance.