In a previous post, I described how to do backpropagation with a 1-layer neural network. I’ve written this post assuming some familiarity with the previous post.

When first created, 1-layer neural networks brought about quite a bit of excitement, but this excitement quickly dissipated when researchers realized that 1-layer neural networks could only solve a limited set of problems.

Researchers knew that adding an extra layer to the neural networks enabled neural networks to solve much more complex problems, but they didn’t know how to train these more complex networks.

In the previous post, I described “backpropagation,” but this wasn’t the portion of backpropagation that really changed the history of neural networks. What really changed neural networks is backpropagation with an extra layer. Extending backpropagation to this extra layer enabled researchers to train more complex networks. The extra layers are called hidden layers. In this post, I will describe backpropagation with a hidden layer.

To describe backpropagation with a hidden layer, I will demonstrate how neural networks can solve the XOR problem.

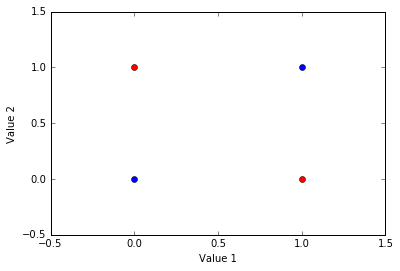

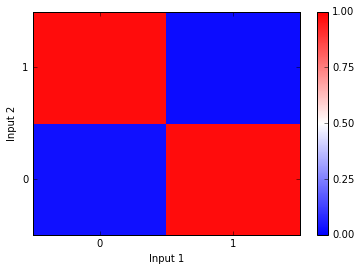

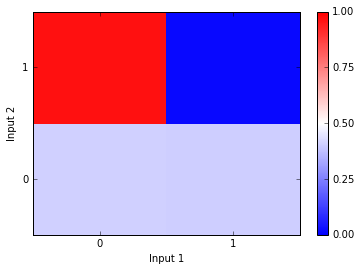

In this example of the XOR problem there are four items. Each item is defined by two values. If these two values are the same, then the item belongs to one group (blue here). If the two values are different, then the item belongs to another group (red here).
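As a minimal sketch, the four items and their groups can be written down as arrays. The names `inputs` and `targets` are illustrative only, and the 0/1 coding follows the convention used later in this post (0 for blue, 1 for red).

```python
import numpy as np

# The four XOR items: each row is one item, each column one of its two values.
inputs = np.array([[0, 0],
                   [0, 1],
                   [1, 0],
                   [1, 1]])

# Label 0 (blue) when the two values match, 1 (red) when they differ.
targets = np.array([int(a != b) for a, b in inputs])

for item, target in zip(inputs, targets):
    group = "red" if target == 1 else "blue"
    print(item, "->", target, f"({group})")
```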

Below, I have depicted the XOR problem. The goal is to find a model that can distinguish between the blue and red groups based on an item’s values.

This code is also available as a Jupyter notebook on my GitHub.


Again, each item has two values. An item’s first value is represented on the x-axis. An item’s second value is represented on the y-axis. The red items belong to one category and the blue items belong to another.

This is a non-linear problem because no linear function can segregate the groups. For instance, a horizontal line could segregate the upper and lower items and a vertical line could segregate the left and right items, but no single linear function can segregate the red and blue items.
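One way to get a feel for this claim is a brute-force search. The sketch below tries a small grid of lines `w1*x + w2*y + b = 0` (the grid and variable names are arbitrary choices for illustration) and counts how many of the four items each line puts in the right group. A search like this is not a proof, but it shows that three out of four is the best a single line manages.

```python
import itertools
import numpy as np

inputs = np.array([[0, 0], [0, 1], [1, 0], [1, 1]])
targets = np.array([0, 1, 1, 0])  # XOR labels: 1 when the two values differ

# Candidate values for the two weights and the offset of the line.
grid = np.linspace(-2, 2, 9)

best_correct = 0
for w1, w2, b in itertools.product(grid, repeat=3):
    # Items on the positive side of the line are labelled 1, the rest 0.
    guesses = (inputs @ np.array([w1, w2]) + b > 0).astype(int)
    best_correct = max(best_correct, int(np.sum(guesses == targets)))

print(best_correct)  # 3: no line in the grid gets all four items right
```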

We need a non-linear function to separate the groups, and neural networks can emulate a non-linear function that segregates them.

While this problem may seem relatively simple, it gave the initial neural networks quite a hard time. In fact, this is the problem that depleted much of the original enthusiasm for neural networks.

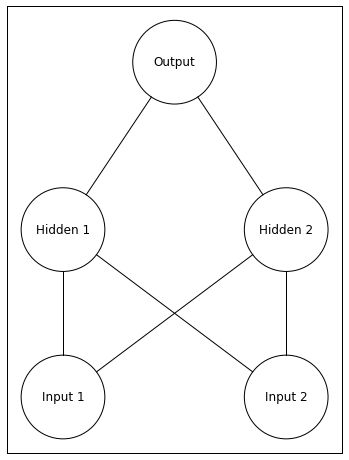

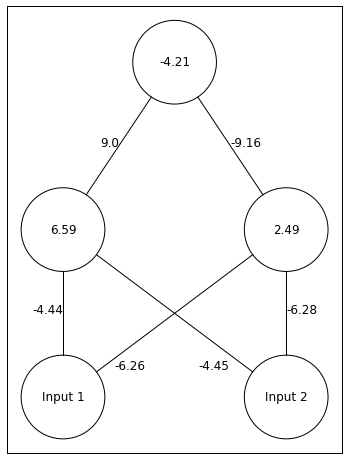

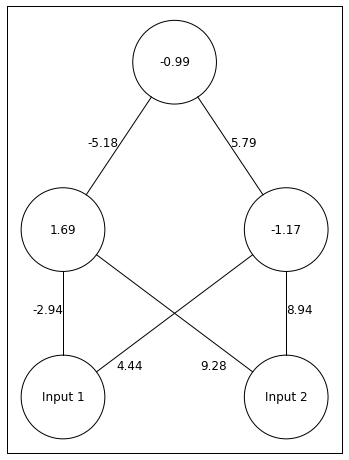

Neural networks can easily solve this problem, but they require an extra layer. Below I depict a network with an extra layer (a 2-layer network). To depict the network, I use a repository available on my GitHub.


Notice that this network now has 5 total neurons. The two units at the bottom are the input layer. The activity of the input units is the value of the inputs (same as the inputs in my previous post). The two units in the middle are the hidden layer. The activity of the hidden units is calculated in the same manner as the output units from my previous post. The unit at the top is the output layer. The activity of this unit is found in the same manner as in my previous post, but the activity of the hidden units replaces the input units.

Thus, when the neural network makes its guess, the only difference is we have to compute an extra layer’s activity.

The goal of this network is for the output unit to have an activity of 0 when presented with an item from the blue group (inputs are same) and to have an activity of 1 when presented with an item from the red group (inputs are different).

One additional aspect of neural networks that I haven’t discussed is that each non-input unit can have a bias. You can think about bias as a propensity for the unit to become active or not to become active. For instance, a unit with a positive bias is more likely to be active than a unit with no bias.

I will implement bias as an extra line feeding into each unit. The weight of this line is the bias, and the bias line is always active, meaning this bias is always present.
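The "extra line" idea can be sketched in a few lines of NumPy. The weights and bias below are hypothetical numbers chosen only for illustration; the point is that appending an input that is always 1 and treating the bias as its weight gives the same net input as adding the bias explicitly.

```python
import numpy as np

inputs = np.array([0, 1])          # one item's two values
weights = np.array([-0.3, 0.6])    # hypothetical connection weights
bias = 0.2                         # hypothetical positive bias

# Explicit form: weighted sum of the inputs plus the bias.
net_explicit = np.dot(inputs, weights) + bias

# "Extra line" form: append an always-on input and use the bias as its weight.
inputs_with_bias = np.append(inputs, 1)
weights_with_bias = np.append(weights, bias)
net_extra_line = np.dot(inputs_with_bias, weights_with_bias)

print(net_explicit, net_extra_line)
```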

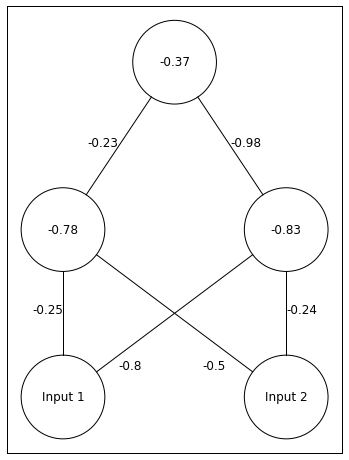

Below, I seed this 2-layer neural network with a random set of weights.


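Above we have our network. The depiction of the two weights that cross between the input and hidden layers is confusing: -0.8 belongs to the line from Input 1 to Hidden 2, and -0.5 belongs to the line from Input 2 to Hidden 1.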

Let’s go through one example of our network receiving an input and making a guess. Let’s say the input is [0 1]. This means $I_1 = 0$ and $I_2 = 1$. The correct answer in this case is 1.

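First, we have to calculate $H_1$’s input. Remember we can write input as

$$net = \sum_i Input_i \times Weight_i$$

With a bias, we can rewrite it as

$$net = Bias + \sum_i Input_i \times Weight_i$$

Specifically for $H_1$

$$net_{H_1} = -0.7752 + 0 \times (-0.2512) + 1 \times (-0.5015) = -1.2767$$

Remember the first term in the equation above is the bias term. Let’s see what this looks like in code.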
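A minimal sketch of this step follows. The weight values are an assumption: they are back-derived so that the arithmetic reproduces the numbers printed in this post, and the name `weights_1` and its column order (Input 1, Input 2, bias) are illustrative, not recovered from the original listing.

```python
import numpy as np

# Assumed input-to-hidden weights. Rows: Hidden 1, Hidden 2.
# Columns: Input 1, Input 2, bias.
weights_1 = np.array([[-0.25119612, -0.50149299, -0.77520335],
                      [-0.80193714, -0.23946929, -0.83088916]])

input_pattern = np.array([0, 1])

# Append the always-on bias input, then take one dot product per hidden unit.
net_hidden = np.dot(np.append(input_pattern, 1), weights_1.T)
print(net_hidden)
```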


[-1.27669634 -1.07035845]

Note that by using np.dot, I can calculate both hidden units’ inputs in a single line of code.

Next, we have to find the activity of units in the hidden layer.

I will translate input into activity with a logistic function, as I did in the previous post.

$$Logistic(net) = \frac{1}{1 + e^{-net}}$$

Let’s see what this looks like in code.
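A minimal sketch of the logistic step, applied to the two net inputs printed earlier. The function name `logistic` is an illustrative choice.

```python
import numpy as np

def logistic(x):
    """Squash any real-valued input into the range (0, 1)."""
    return 1 / (1 + np.exp(-x))

# Net inputs of the two hidden units for the input [0 1].
net_hidden = np.array([-1.27669634, -1.07035845])

hidden = logistic(net_hidden)
print(hidden)
```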


[ 0.2181131 0.25533492]

So far so good: the logistic function has transformed the negative inputs into small positive values below 0.5.

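Now we have to compute the output unit’s activity.

$$net_{Output} = Bias + H_1 \times W_{H_1 \rightarrow Output} + H_2 \times W_{H_2 \rightarrow Output}$$

Plugging in the numbers

$$net_{Output} = -0.3664 + 0.2181 \times (-0.2287) + 0.2553 \times (-0.9792) = -0.6663$$

$$Output = \frac{1}{1 + e^{0.6663}} = 0.3393$$

Now the code for computing $net_{Output}$ and the Output unit’s activity.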
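A minimal sketch of the output step. As before, the values in `weights_2` are an assumption, back-derived so that the result matches the numbers printed in this post; the column order (Hidden 1, Hidden 2, bias) is an illustrative choice.

```python
import numpy as np

def logistic(x):
    return 1 / (1 + np.exp(-x))

# Assumed hidden-to-output weights. Columns: Hidden 1, Hidden 2, bias.
weights_2 = np.array([[-0.22867936, -0.97924805, -0.36635177]])

# Hidden activities computed for the input [0 1].
hidden = np.array([0.2181131, 0.25533492])

# Append the always-on bias input to the hidden activities.
net_output = np.dot(np.append(hidden, 1), weights_2.T)
output = logistic(net_output)

print(net_output)
print(output)
```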


net_Output

[-0.66626595]

Output

[ 0.33933346]

Okay, that’s the network’s guess for one input, and it is nowhere near the correct answer (1). Let’s look at what the network predicts for the other input patterns. Below I create a feedforward, 2-layer neural network and plot the network’s guesses for the four input patterns.
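A minimal sketch of the feedforward pass over all four patterns. It prints the guesses instead of plotting them, and it assumes the same back-derived weights as above; the function name `feedforward` is illustrative.

```python
import numpy as np

def logistic(x):
    return 1 / (1 + np.exp(-x))

def feedforward(input_pattern, weights_1, weights_2):
    """Return (hidden activity, output activity) for one input pattern."""
    hidden = logistic(np.dot(np.append(input_pattern, 1), weights_1.T))
    output = logistic(np.dot(np.append(hidden, 1), weights_2.T))
    return hidden, output

# Assumed weights; the last column of each matrix is the bias weight.
weights_1 = np.array([[-0.25119612, -0.50149299, -0.77520335],
                      [-0.80193714, -0.23946929, -0.83088916]])
weights_2 = np.array([[-0.22867936, -0.97924805, -0.36635177]])

patterns = np.array([[0, 0], [0, 1], [1, 0], [1, 1]])
guesses = np.array([feedforward(p, weights_1, weights_2)[1][0] for p in patterns])

for pattern, guess in zip(patterns, guesses):
    print(pattern, round(float(guess), 3))
```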


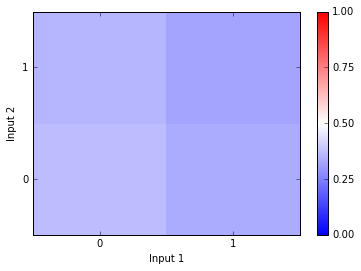

In the plot above, I have Input 1 on the x-axis and Input 2 on the y-axis. So if the Input is [0,0], the network produces the activity depicted in the lower left square. If the Input is [1,0], the network produces the activity depicted in the lower right square. If the network produces an output of 0, then the square will be blue. If the network produces an output of 1, then the square will be red. As you can see, all of the network’s outputs fall between 0.25 and 0.5, nowhere near the correct answers.

So how do we update the weights in order to reduce the error between our guess and the correct answer?

First, we will do backpropagation between the output and hidden layers. This is exactly the same as backpropagation in the previous post.

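In the previous post I described how our goal was to decrease error by changing the weights between units. This is the equation we used to describe changes in error with changes in the weights. The equation below expresses changes in error with changes to the weight between $H_1$ and the Output unit.

$$\frac{\partial Error}{\partial W_{H_1 \rightarrow Output}} = \frac{\partial Error}{\partial Output} \times \frac{\partial Output}{\partial net_{Output}} \times \frac{\partial net_{Output}}{\partial W_{H_1 \rightarrow Output}}$$

$$\frac{\partial Error}{\partial W_{H_1 \rightarrow Output}} = -(target - Output) \times Output(1 - Output) \times H_1 = -(1 - 0.3393) \times 0.3393(1 - 0.3393) \times 0.2181 = -0.0323$$

Now multiply this weight adjustment by the learning rate (0.5 here).

$$\Delta W_{H_1 \rightarrow Output} = 0.5 \times (-0.0323) = -0.0162$$

Finally, we apply the weight adjustment to $W_{H_1 \rightarrow Output}$ by subtracting it from the current weight.

$$W_{H_1 \rightarrow Output} = -0.2287 - (-0.0162) = -0.2125$$

Now let’s do the same thing, but for all of the output unit’s weights, including the bias, in the code.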
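A minimal sketch of the output-layer update. The learning rate of 0.5 and the starting weights are assumptions, back-derived so that the result matches the printed values; the helper is named `delta_output` after the function this post mentions, but its body here is a guess.

```python
import numpy as np

eta = 0.5                      # learning rate (assumed)
target = 1                     # correct answer for the input [0 1]
output = np.array([0.33933346])
hidden = np.array([0.2181131, 0.25533492])

# Assumed hidden-to-output weights. Columns: Hidden 1, Hidden 2, bias.
weights_2 = np.array([[-0.22867936, -0.97924805, -0.36635177]])

def delta_output(target, output):
    """Change in error with the output unit's net input (logistic unit)."""
    return -(target - output) * output * (1 - output)

# Gradient for each line into the output unit: delta times the sending activity.
gradient = delta_output(target, output)[:, np.newaxis] * np.append(hidden, 1)

# Step against the gradient to reduce the error.
new_weights_2 = weights_2 - eta * gradient
print(new_weights_2)
```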


[[-0.21252673 -0.96033892 -0.29229558]]

The hidden layer changes things when we do backpropagation. Above, we computed the new weights using the output unit’s error. Now, we want to find how adjusting a weight changes the error, but this weight connects an input to the hidden layer rather than connecting to the output layer. This means we have to propagate the error backwards to the hidden layer.

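We will describe backpropagation for the line connecting an input unit $I_i$ and $H_1$ as

$$\frac{\partial Error}{\partial W_{I_i \rightarrow H_1}} = \frac{\partial Error}{\partial H_1} \times \frac{\partial H_1}{\partial net_{H_1}} \times \frac{\partial net_{H_1}}{\partial W_{I_i \rightarrow H_1}}$$

Pretty similar. We just replaced Output with $H_1$. The interpretation (starting with the final term and moving left) is that changing $W_{I_i \rightarrow H_1}$ changes $H_1$’s input. Changing $H_1$’s input changes $H_1$’s activity. Changing $H_1$’s activity changes the error. This last assertion (the first term) is where things get complicated. Let’s take a closer look at this first term

$$\frac{\partial Error}{\partial H_1} = \frac{\partial Error}{\partial net_{Output}} \times \frac{\partial net_{Output}}{\partial H_1}$$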

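Changing $H_1$’s activity changes the input to the Output unit. Changing the output unit’s input changes the error. Hmmmm, still not quite there yet. Let’s look at how changes to the output unit’s input change the error.

$$\frac{\partial Error}{\partial net_{Output}} = \frac{\partial Error}{\partial Output} \times \frac{\partial Output}{\partial net_{Output}}$$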

You can probably see where this is going. Changing the output unit’s input changes the output unit’s activity. Changing the output unit’s activity changes error. There we go.

Okay, this got a bit heavy, but here comes some good news. Compare the two terms of the equation above to the first two terms of our original backpropagation equation. They’re the same! Now let’s look at $\frac{\partial net_{Output}}{\partial H_1}$ (the second term from the first equation after our new backpropagation equation).

$$\frac{\partial net_{Output}}{\partial H_1} = W_{H_1 \rightarrow Output}$$

Again, I am glossing over how to derive these partial derivatives. For a more complete explanation, I recommend Chapter 8 of Rumelhart and McClelland’s PDP book. Nonetheless, this means we can take the output of our function delta_output multiplied by $W_{H_1 \rightarrow Output}$ and we have the first term of our backpropagation equation! We want $W_{H_1 \rightarrow Output}$ to be the weight used in the forward pass, not the updated weight.

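The second two terms from our backpropagation equation are the same as in our original backpropagation equation.

$\frac{\partial H_1}{\partial net_{H_1}} = H_1(1 - H_1)$ - this is specific to logistic activation functions.

and

$$\frac{\partial net_{H_1}}{\partial W_{I_i \rightarrow H_1}} = I_i$$

Let’s try and write this out.

$$\frac{\partial Error}{\partial W_{I_i \rightarrow H_1}} = -(target - Output) \times Output(1 - Output) \times W_{H_1 \rightarrow Output} \times H_1(1 - H_1) \times I_i$$

It’s not short, but it’s doable. Let’s plug in the numbers.

$$\frac{\partial Error}{\partial W_{I_i \rightarrow H_1}} = -(1 - 0.3393) \times 0.3393(1 - 0.3393) \times (-0.2287) \times 0.2181(1 - 0.2181) \times I_i = 0.0058 \times I_i$$

Not too bad. Now let’s see the code.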


[[-0.25119612 -0.50149299 -0.77809147]

[-0.80193714 -0.23946929 -0.84467792]]

Alright! Let’s implement all of this into a single model and train the model on the XOR problem. Below I create a neural network that includes both a forward pass and an optional backpropagation pass.
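A minimal sketch of such a model follows. The function name `network`, the dictionary keys, and the default learning rate are illustrative assumptions, not the original implementation. Backpropagation runs only when a target is supplied.

```python
import numpy as np

def logistic(x):
    return 1 / (1 + np.exp(-x))

def network(input_pattern, weights, target=None, eta=0.5):
    """Forward pass, plus one backpropagation step when a target is given.

    weights is a dict with 'weights_1' (input to hidden) and 'weights_2'
    (hidden to output); the last column of each matrix holds the bias weights.
    Returns the output activity and the (possibly updated) weights.
    """
    w1, w2 = weights["weights_1"], weights["weights_2"]
    inputs = np.append(input_pattern, 1)        # add the always-on bias input
    hidden = logistic(np.dot(inputs, w1.T))
    hidden_b = np.append(hidden, 1)
    output = logistic(np.dot(hidden_b, w2.T))
    if target is None:
        return output, weights

    # Deltas use the forward-pass weights, before any update is applied.
    delta_out = -(target - output) * output * (1 - output)
    delta_hid = delta_out * w2[0, :-1] * hidden * (1 - hidden)
    new_weights = {
        "weights_1": w1 - eta * np.outer(delta_hid, inputs),
        "weights_2": w2 - eta * np.outer(delta_out, hidden_b),
    }
    return output, new_weights

# Usage with weights back-derived from the values printed in this post (assumed).
weights = {
    "weights_1": np.array([[-0.25119612, -0.50149299, -0.77520335],
                           [-0.80193714, -0.23946929, -0.83088916]]),
    "weights_2": np.array([[-0.22867936, -0.97924805, -0.36635177]]),
}
output, new_weights = network([0, 1], weights, target=1)
print(output)
```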


Okay, that’s the network. Below, I train the network until its answers are very close to the correct answer.
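A minimal sketch of a training loop. Several details are assumptions made for illustration: it runs for a fixed number of iterations instead of stopping when the answers are close enough, it presents a randomly chosen item on each iteration, and the seed, weight range, and learning rate are arbitrary. Depending on the starting weights, a run like this may or may not reach a good solution.

```python
import numpy as np

def logistic(x):
    return 1 / (1 + np.exp(-x))

def train_step(pattern, target, w1, w2, eta):
    """One forward pass and one backpropagation update; also returns squared error."""
    inputs = np.append(pattern, 1)
    hidden = logistic(w1 @ inputs)
    hidden_b = np.append(hidden, 1)
    output = logistic(w2 @ hidden_b)
    delta_out = -(target - output) * output * (1 - output)
    delta_hid = delta_out * w2[0, :-1] * hidden * (1 - hidden)
    w1 = w1 - eta * np.outer(delta_hid, inputs)
    w2 = w2 - eta * np.outer(delta_out, hidden_b)
    return w1, w2, float((target - output[0]) ** 2)

patterns = np.array([[0, 0], [0, 1], [1, 0], [1, 1]])
targets = np.array([0, 1, 1, 0])

rng = np.random.default_rng(0)           # seed chosen arbitrarily
w1 = rng.uniform(-1, 1, size=(2, 3))     # input to hidden, last column is bias
w2 = rng.uniform(-1, 1, size=(1, 3))     # hidden to output, last column is bias

errors = []
for _ in range(20000):
    i = rng.integers(len(patterns))      # present a randomly chosen item
    w1, w2, err = train_step(patterns[i], targets[i], w1, w2, eta=0.5)
    errors.append(err)

# Compare average squared error early and late in training.
print(np.mean(errors[:100]), np.mean(errors[-100:]))
```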


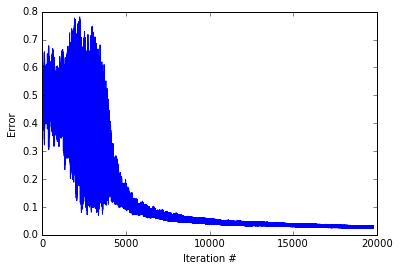

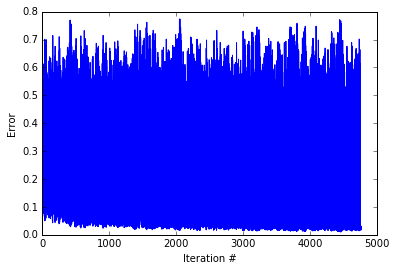

Let’s see how error changed across training.


Really cool. The network starts with volatile error - sometimes being nearly correct and sometimes being completely incorrect. Then, after about 5000 iterations, the network starts down the slow path of perfecting an answer scheme. Below, I create a plot depicting the network’s activity for the different input patterns.


Again, the Input 1 value is on the x-axis and the Input 2 value is on the y-axis. As you can see, the network guesses 1 when the inputs are different and it guesses 0 when the inputs are the same. Perfect! Below I depict the network with these correct weights.


The network finds a pretty cool solution. Both hidden units are relatively active, but one hidden unit sends a strong positive signal and the other sends a strong negative signal. The output unit has a negative bias, so if neither input is on, it will have an activity around 0. If both Input units are on, then the hidden unit that sends a positive signal will be inhibited, and the output unit will have activity near 0. Otherwise, the hidden unit with a positive signal gives the output unit an activity near 1.

This is all well and good, but if you try to train this network with random weights you might find that it sometimes produces an incorrect set of weights. This is because the network runs into a local minimum. A local minimum is a point where any small change in the weights would increase the error, so the network is left with a sub-optimal set of weights.

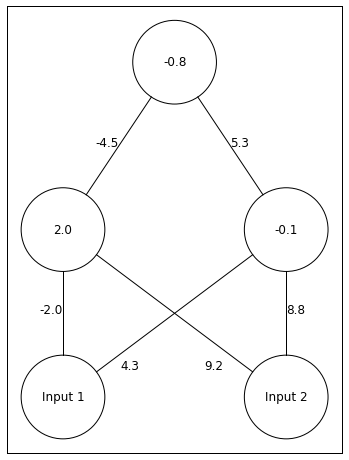

Below I hand-pick a set of weights that produce a local optimum.


Using these weights as the starting point, let’s see what the network will do with training.


As you can see, the network never reduces error. Let’s see how the network responds to the different input patterns.


Looks like the network produces the correct answer in some cases but not others. The network is particularly confused when Input 2 is 0. Below I depict the weights after “training.” As you can see, they have not changed too much from where the weights started before training.


This network was unable to push itself out of the local optimum. While local optima are a problem, there are a couple of things we can do to avoid them. First, we should always train a network multiple times with different random weights in order to test for local optima. If the network continually finds local optima, then we can increase the learning rate. By increasing the learning rate, the network can escape local optima in some cases. This should be done with care, though, as too large a learning rate can also prevent the network from finding the global minimum.
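The first suggestion, training several times from different random weights, can be sketched as below. The function name `train`, the seeds, the weight range, and the iteration count are all illustrative assumptions. Runs that finish with a clearly higher error than the others are candidates for having settled in a local optimum.

```python
import numpy as np

def logistic(x):
    return 1 / (1 + np.exp(-x))

def train(seed, eta=0.5, iterations=20000):
    """Train a 2-2-1 network on XOR from random weights; return final mean squared error."""
    rng = np.random.default_rng(seed)
    patterns = np.array([[0, 0], [0, 1], [1, 0], [1, 1]])
    targets = np.array([0, 1, 1, 0])
    w1 = rng.uniform(-1, 1, size=(2, 3))   # last column is the bias weight
    w2 = rng.uniform(-1, 1, size=(1, 3))
    for _ in range(iterations):
        i = rng.integers(4)
        inputs = np.append(patterns[i], 1)
        hidden = logistic(w1 @ inputs)
        hidden_b = np.append(hidden, 1)
        output = logistic(w2 @ hidden_b)
        delta_out = -(targets[i] - output) * output * (1 - output)
        delta_hid = delta_out * w2[0, :-1] * hidden * (1 - hidden)
        w1 = w1 - eta * np.outer(delta_hid, inputs)
        w2 = w2 - eta * np.outer(delta_out, hidden_b)
    # Evaluate all four items with the final weights.
    hidden = logistic(np.column_stack([patterns, np.ones(4)]) @ w1.T)
    outputs = logistic(np.column_stack([hidden, np.ones(4)]) @ w2.T).ravel()
    return float(np.mean((targets - outputs) ** 2))

# One training run per seed, each starting from different random weights.
final_errors = {seed: train(seed) for seed in range(5)}
for seed, err in final_errors.items():
    print(seed, round(err, 4))
```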

Alright, that’s it. Obviously the neural network behind AlphaGo is much more complex than this one, but I would guess that, while AlphaGo is much larger, the basic computations underlying it are similar.

Hopefully these posts have given you an idea of how neural networks function and why they’re so cool!